3D formats for Blender: PBR and glTF

Tags: posts, tutorial, blender

This came up in a few other tutorials and felt worth making a separate post for. I am absolutely not an expert on these things; I just couldn't find any relevant, concise documentation, so this is my best understanding. This post assumes a basic familiarity with Blender materials and UV maps.

PBR: Physically Based Rendering

This is a standard approach to rendering 3D materials, used by the glTF format. It's the approach most downloaded/exported models use and what will be expected if you upload materials to places like BlenderKit. It is also what Blender uses for texture painting. I can't find a simple definition anywhere, but what it generally looks like in Blender is a bunch of UV-mapped image textures piped into a Principled BSDF shader.

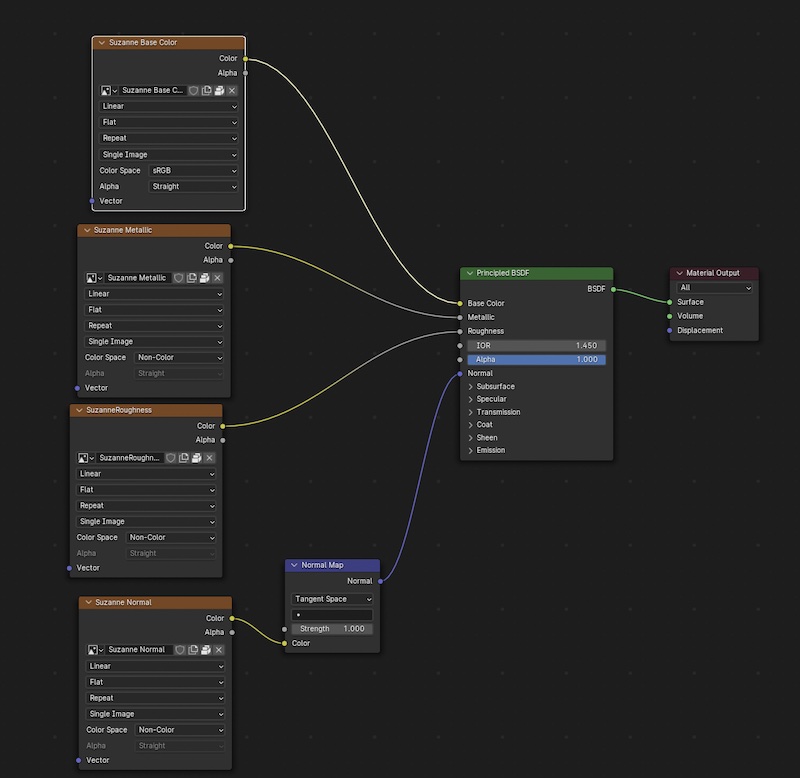

Specifically, we have a Base Image piped into Color, associated images piped into Roughness, Metallic, and Alpha, and a Normal image piped in via a Bump Map.

Here's an example PBR model, created with texture painting in Blender:

glTF Format

Graphics Library Transmission Format, formerly known as WebGL TF, is a standard file format for 3D models. In my experience it's the best format for exporting/loading models created for game engines like Unity or Unreal, and it is the format used for importing into Ren'Py.

glTF files are imported via "File > Import > glTF 2.0 (.glb/.gltf)" and exported via "File > Export > glTF 2.0 (.glb/.gltf)". I do not understand the difference between .gltf and .glb, I just use whatever I'm given >.>
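For reference, the glTF 2.0 spec describes .gltf as a JSON text file (usually with mesh data and textures in separate files next to it) and .glb as a single binary file that starts with the ASCII bytes `glTF`. Below is a minimal sketch for telling the two apart from their contents instead of their extension. The function names `glb_header` and `sniff_gltf` are made up for this post and are not part of Blender or any library.

```python
import json
import struct

GLB_MAGIC = b"glTF"  # first four bytes of every .glb file

def glb_header(data):
    """Return (version, total_length) from a GLB header, or None if not GLB."""
    if len(data) < 12 or data[:4] != GLB_MAGIC:
        return None
    # After the magic come two little-endian uint32 values.
    return struct.unpack_from("<II", data, 4)

def sniff_gltf(data):
    """Classify raw file bytes as 'glb', 'gltf', or 'unknown'."""
    if glb_header(data) is not None:
        return "glb"
    try:
        doc = json.loads(data.decode("utf-8"))
    except (UnicodeDecodeError, ValueError):
        return "unknown"
    # Every glTF 2.0 JSON document has a top-level "asset" object.
    return "gltf" if isinstance(doc, dict) and "asset" in doc else "unknown"
```

To use it on a real file, read the bytes first, e.g. `sniff_gltf(open(path, "rb").read())`, where `path` is whatever model you were given.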

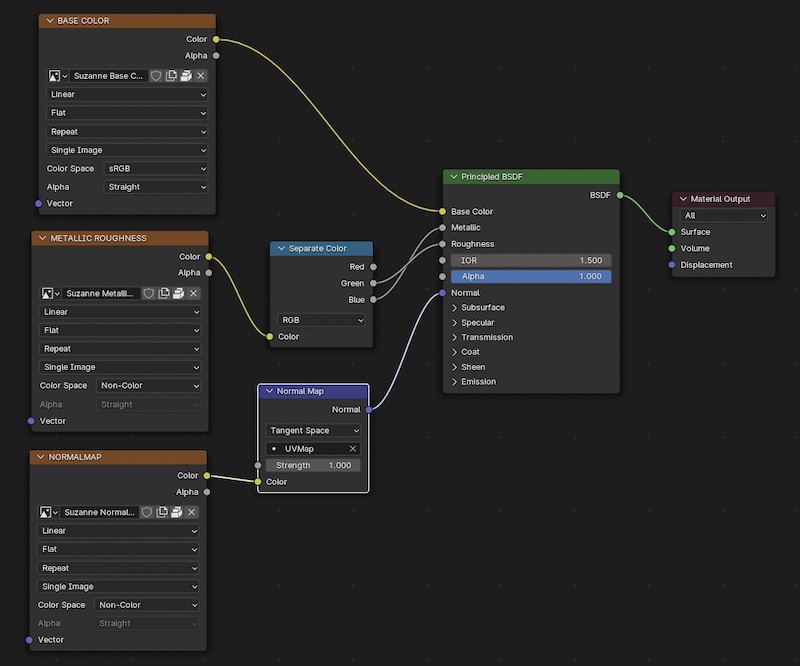

I have only used glTF files after they're imported to Blender, at which point they usually look similar to the PBR setup above. However, instead of separate Metallic and Roughness images there's a single "METALLIC ROUGHNESS" image, which stores Metallic in the blue channel and Roughness in the green channel.
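To make the channel packing concrete, here is a small sketch that splits a combined image back into two greyscale maps. It assumes the pixels are already loaded as `(r, g, b)` tuples with values from 0 to 1; `split_metallic_roughness` is a made-up helper name, and loading the image itself is left out.

```python
def split_metallic_roughness(pixels):
    """Split packed (r, g, b) pixels into separate metallic and roughness lists.

    glTF stores roughness in the green channel and metallic in the blue
    channel; the red channel is not used for either value.
    """
    metallic = [b for (_r, _g, b) in pixels]
    roughness = [g for (_r, g, _b) in pixels]
    return metallic, roughness

# Two example pixels: one fully metallic and fairly smooth, one non-metallic and rough.
packed = [(0.0, 0.25, 1.0), (0.0, 0.75, 0.0)]
metallic, roughness = split_metallic_roughness(packed)
```

As far as I can tell, the imported node tree does the same job with a node that separates the colour channels before they reach the shader.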

Here's the PBR model above, exported as glTF and then re-imported to Blender:

I haven't been able to find a list of the full range of node types that show up in imported glTF objects, but some I've observed:

- Mapping nodes used to transform the coordinates sent to image textures

- Alpha of the BASE IMAGE sent to the alpha input of the Principled BSDF via some math nodes

- EMISSION image sent to the emission input of the Principled BSDF

- DISPLACEMENT image sent to the displacement input of the Material Output node

- Sending the Vertex Color to the color input of the Principled BSDF instead of an image texture

- OCCLUSION image sent to a group called "glTF Material" that afaict doesn't do anything because Blender doesn't support it.
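Several of the setups above line up with texture slots that the glTF 2.0 spec defines on a material, so one way to predict which nodes will appear is to look inside the file before importing it. The sketch below only works on the JSON in a .gltf file (not .glb), and `material_textures` plus the example material are made up for illustration.

```python
import json

# Texture slots inside a material's "pbrMetallicRoughness" object.
PBR_SLOTS = ("baseColorTexture", "metallicRoughnessTexture")
# Texture slots that sit directly on the material.
EXTRA_SLOTS = ("normalTexture", "occlusionTexture", "emissiveTexture")

def material_textures(gltf_text):
    """Map each material name to the list of texture slots it uses."""
    doc = json.loads(gltf_text)
    result = {}
    for i, mat in enumerate(doc.get("materials", [])):
        pbr = mat.get("pbrMetallicRoughness", {})
        used = [slot for slot in PBR_SLOTS if slot in pbr]
        used += [slot for slot in EXTRA_SLOTS if slot in mat]
        # Materials are not required to have a name, so fall back to the index.
        result[mat.get("name", f"material_{i}")] = used
    return result

# Hypothetical, stripped-down .gltf content with a single material.
example = json.dumps({
    "asset": {"version": "2.0"},
    "materials": [{
        "name": "Painted",
        "pbrMetallicRoughness": {
            "baseColorTexture": {"index": 0},
            "metallicRoughnessTexture": {"index": 1},
        },
        "normalTexture": {"index": 2},
        "occlusionTexture": {"index": 1},
    }],
})

print(material_textures(example))
```

Vertex colours are stored on the mesh rather than the material, so they will not show up in this listing.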

I haven't had to export any very complex Blender files into glTF but here's the documentation on what is possible. From a brief experiment the exporter doesn't handle procedural textures well. You generally have to stick to flat colours, image textures, and vertex colours for your textures and not use any modifiers on your meshes etc.